Encarsia is the first automated framework for injecting realistic hardware bugs. It derives its injection methodology from a comprehensive survey of 177 real-world bugs in open-source CPUs, identifying recurring structural patterns in how these bugs manifest. By replicating these patterns, Encarsia generates diverse bug sets that provide controlled evaluation targets for hardware fuzzers. This improves on existing evaluation methodologies, which are often based on unproven theories or do not allow direct comparisons between fuzzers. Our evaluation of CPU fuzzers on Encarsia-injected bugs challenges common assumptions, revealing, for example, that structural coverage metrics, often advertised as central by many fuzzers, do not enhance bug detection. These findings lay the foundation for more effective fuzzing strategies, making Encarsia a pivotal step toward standardized, data-driven CPU fuzzing research.

You can access the resulting publication through this link. Encarsia is fully open-source and available on GitHub. It is also permanently archived on Zenodo. Additionally, Encarsia underwent artifact evaluation, during which our results were independently verified for accuracy, earning us the Reproduced badge.

What is Fuzzing?

Fuzzing is an automated testing technique that supplies invalid, unexpected, or random inputs to a system while monitoring its behavior for failures. In CPU fuzzing, this involves executing randomized programs and detecting anomalies such as stalls, assertion failures, or discrepancies against an instruction set simulator (ISS), which serves as a reference model. Such anomalies indicate underlying hardware bugs, because they show that the CPU’s behavior deviates from the Instruction Set Architecture (ISA) specification.
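A minimal sketch of the anomaly checks described above, assuming the simulation harness already produces a trace of retired instructions for both the CPU under test and the ISS. The `Commit` record, the trace format, and the cycle-budget rule for declaring a stall are hypothetical illustrations, not the interface of any particular fuzzer.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Commit:
    """One retired instruction (hypothetical trace format)."""
    pc: int                # program counter of the retired instruction
    rd: Optional[int]      # destination register index, or None if nothing is written
    value: Optional[int]   # value written to rd, or None if nothing is written


def check_run(dut: List[Commit], iss: List[Commit], cycles: int, max_cycles: int,
              assertion_fired: bool = False) -> Optional[str]:
    """Return a description of the first anomaly in one run, or None if none is found."""
    if assertion_fired:
        return "assertion failure in the RTL simulation"
    if cycles > max_cycles:
        # Hypothetical stall rule: the program did not finish within its cycle budget.
        return f"stall: no completion within {max_cycles} cycles"
    # Compare retired instructions in order against the ISS reference.
    for i, (got, want) in enumerate(zip(dut, iss)):
        if got != want:
            return f"mismatch at commit {i}: DUT {got} vs ISS {want}"
    if len(dut) != len(iss):
        return f"length mismatch: DUT retired {len(dut)}, ISS retired {len(iss)}"
    return None
```

Any non-None result flags the test program as a potential bug; in this sketch the first anomaly found is reported and the rest of the run is not inspected.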

Fuzzing’s automated and probabilistic nature enables it to scale effectively, making it one of the most widely adopted techniques for detecting hardware bugs. Consequently, numerous research efforts have emerged, each proposing a novel fuzzing methodology built on its own assumptions about why that methodology is superior. However, without a standardized evaluation methodology, results are inconsistent: studies of competing approaches often reach contradictory conclusions about their effectiveness.

Limitations of Current Fuzzer Evaluation Metrics

A robust evaluation methodology is crucial for navigating the vast array of proposed fuzzing techniques and directing research efforts effectively. However, current evaluation methodologies for CPU fuzzers have several limitations, which make it difficult to draw meaningful conclusions regarding fuzzer effectiveness. The two most widely used evaluation metrics are:

1. Coverage: Coverage measures the extent to which a fuzzer exercises a design according to some model. This model can be simple, like the number of executed instructions, or much more complex, like the number of microarchitectural state transitions taken. However, research in software fuzzing has shown that coverage does not necessarily correlate with a fuzzer’s ability to discover bugs. The situation is even more tenuous in hardware fuzzing, where coverage metrics rest on unproven assumptions and lack a well-established track record of effectiveness.

2. Discovery of Natural Bugs: This metric evaluates a fuzzer’s performance by its ability to find previously unknown real-world bugs. At first glance, this seems ideal, since it directly reflects a fuzzer’s ability to find bugs. In practice, however, different studies evaluate fuzzers on different CPUs and versions, making direct comparisons impossible. Additionally, as a given CPU design undergoes repeated fuzzing, the easiest-to-detect bugs are found first, leaving fewer and more complex bugs to be found. Consequently, newer fuzzers tested on the same design may appear less effective despite potentially superior capabilities.
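To make the idea of a coverage model concrete, the sketch below implements the two examples mentioned above over a toy execution trace: a simple model (distinct executed instructions) and a richer one (distinct transitions of a single microarchitectural state machine). It also shows the basic coverage-guided retention rule: keep an input only if it reaches coverage points not seen before. The trace format and the state names are hypothetical.

```python
from typing import Callable, Hashable, List, Set, Tuple

Trace = List[Tuple[str, str]]  # (executed instruction, state of one tracked FSM), per step


def instruction_coverage(trace: Trace) -> Set[Hashable]:
    """Simple model: the distinct instructions that were executed."""
    return {instr for instr, _state in trace}


def transition_coverage(trace: Trace) -> Set[Hashable]:
    """Richer model: the distinct (previous, next) state transitions of the tracked FSM."""
    states = [state for _instr, state in trace]
    return set(zip(states, states[1:]))


def keep_if_new(trace: Trace, seen: Set[Hashable],
                model: Callable[[Trace], Set[Hashable]]) -> bool:
    """Coverage-guided retention: keep an input only if it reaches unseen coverage points."""
    new_points = model(trace) - seen
    seen |= new_points  # record them in place so later inputs must reach further
    return bool(new_points)
```

With `t1 = [("add", "IDLE"), ("lw", "REQ"), ("add", "IDLE")]` followed by `t2 = [("add", "IDLE"), ("add", "IDLE")]`, the instruction model rejects `t2` because it adds nothing new, while the transition model keeps it for its new `IDLE -> IDLE` transition. The choice of model therefore changes which inputs a fuzzer keeps; whether either choice helps find bugs is the open question raised above.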

Encarsia

To address these limitations, we introduce Encarsia, a novel framework for evaluating CPU fuzzers via automatic bug injection. Encarsia modifies the Register-Transfer Level (RTL) representation of a CPU to insert synthetic bugs, then measures how well a fuzzer is able to detect them. This method provides several advantages over traditional evaluation metrics:

1. A Standardized Test for All Fuzzers: Encarsia provides a consistent set of injected bugs, allowing for direct, fair comparisons across different fuzzers.

2. Ground Truth: Unlike natural bug discovery, Encarsia offers definitive ground truth about the bugs in the design, including their quantity, behavior, and location. This makes it possible to compute reliable performance metrics, such as the percentage of injected bugs a fuzzer detects. It also lets us quickly pinpoint the specific types of bugs a fuzzer struggles with, guiding targeted improvements in future research.

3. Resistant to Overfitting: Encarsia can inject a large number of new, diverse bugs on demand, so fuzzers can always be tested against fresh challenges instead of overfitting to a particular set of bugs.
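Because the injected bugs are known in advance, the metrics mentioned above reduce to simple set arithmetic. A minimal sketch, assuming the ground truth is available as a mapping from hypothetical bug identifiers to their category (here, the two categories from the survey) and that the fuzzer’s findings have already been matched to those identifiers:

```python
from collections import Counter
from typing import Dict, List, Set, Tuple


def detection_report(injected: Dict[str, str], detected: Set[str]
                     ) -> Tuple[float, Dict[str, float], List[str]]:
    """Score one fuzzing campaign against the ground truth of injected bugs.

    injected: bug id -> category (e.g. "mixup" or "conditional")
    detected: ids of the injected bugs the fuzzer triggered
    Returns (overall detection rate, detection rate per category, ids of missed bugs).
    """
    if not injected:
        raise ValueError("no injected bugs to score against")
    unknown = detected - set(injected)
    if unknown:
        raise ValueError(f"detections not in ground truth: {sorted(unknown)}")
    per_total = Counter(injected.values())
    per_hit = Counter(injected[bug] for bug in detected)
    overall = len(detected) / len(injected)
    per_category = {cat: per_hit[cat] / n for cat, n in per_total.items()}
    missed = sorted(set(injected) - detected)
    return overall, per_category, missed
```

The per-category breakdown and the list of missed bugs are what let an evaluation point at the bug types a fuzzer struggles with, rather than reporting a single number.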

Survey of Bugs in Open Source CPUs

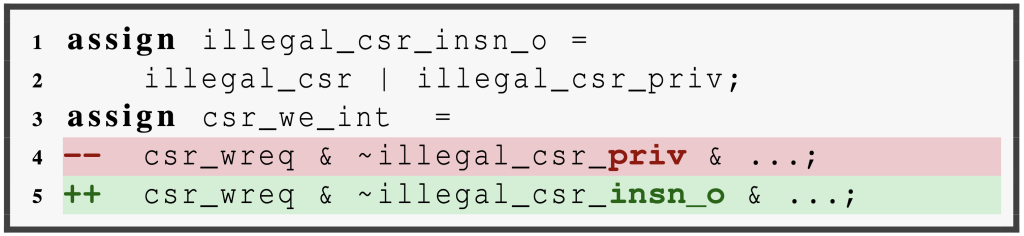

For Encarsia to provide meaningful evaluations, the injected bugs must accurately reflect those found in real hardware. To achieve this, we systematically analyzed 177 bugs in open-source CPUs to identify recurring structural patterns. These patterns can then be automatically replicated in CPUs to inject realistic synthetic bugs. From our survey, we identified two categories of recurring structural bugs:

1. Signal Mix-ups: These refer to the confusion of signals, functions, or other elements in assignments or expressions. They often arise from human error due to signals having similar names, types, or purposes.

2. Broken Conditionals: These refer to faulty conditional statements where the logic fails to evaluate correctly for certain conditions, leading to unintended behavior or incorrect outcomes. Common causes include overlooking exceptional cases or making algorithmic mistakes.

Bug Injecting Transformations

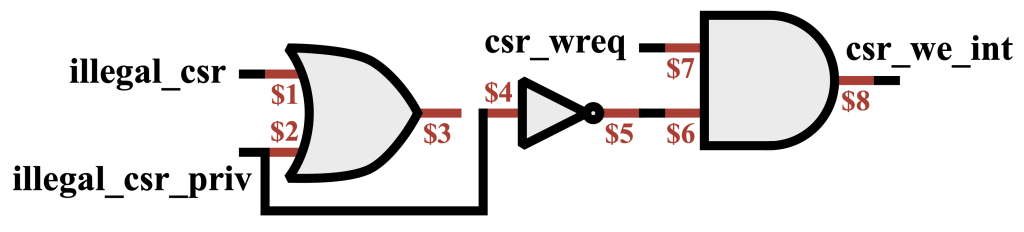

Encarsia leverages custom netlist transformation passes implemented within the Yosys Open Synthesis Suite to replicate the recurring patterns from the survey and inject bugs. Yosys converts hardware designs into its intermediate representation, RTLIL, so that different HDL syntax is handled uniformly. Encarsia operates at this level, modifying the design to introduce realistic bugs. The altered RTLIL is then translated back into HDL, ensuring compatibility with existing CPU toolchains.
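The overall flow can be sketched as a Yosys script generated from Python. `read_verilog`, `hierarchy`, `proc`, and `write_verilog` are standard Yosys commands; the injection pass name is a hypothetical placeholder for a custom pass (for example, one loaded as a plugin with `yosys -m`), and the exact command sequence, including running `proc` first, is an assumption rather than Encarsia’s actual script.

```python
import subprocess
from typing import List, Optional


def build_injection_script(src: str, top: str, out: str, injection_pass: str) -> str:
    """Compose a Yosys script: HDL -> RTLIL, apply one injection pass, RTLIL -> Verilog."""
    commands: List[str] = [
        f"read_verilog -sv {src}",       # parse the HDL into RTLIL
        f"hierarchy -top {top}",         # elaborate the design from its top module
        "proc",                          # lower processes to cells (assumed ordering)
        injection_pass,                  # hypothetical placeholder for a bug-injection pass
        f"write_verilog -noattr {out}",  # emit Verilog for the existing CPU toolchain
    ]
    return "; ".join(commands)


def run_yosys(script: str, plugin: Optional[str] = None) -> None:
    """Run the script with the yosys binary; `plugin` is an optional module loaded via -m."""
    cmd = ["yosys", "-q"]
    if plugin:
        cmd += ["-m", plugin]
    cmd += ["-p", script]
    subprocess.run(cmd, check=True)
```

For example, `build_injection_script("core.sv", "core", "core_buggy.v", "my_mixup_pass")` yields a single `-p` script, where `my_mixup_pass` is a made-up name. File names containing spaces or semicolons would need quoting in a real script.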

1. Signal Mix-ups: Signal mix-ups are introduced by swapping signal connections within the design. Encarsia identifies assignments in the RTLIL representation, each consisting of a driver and a driven signal. To inject a mix-up, Encarsia randomly selects two assignments and swaps their driver signals, mimicking the human mistakes behind real-world bugs. If the swapped signals have different widths, Encarsia truncates or extends them to the required width, consistent with Verilog’s implicit handling of mismatched widths (excess bits are truncated; narrower values are zero- or sign-extended depending on signedness).

In RTLIL, logical expressions are represented as networks of logic cells interconnected by wires. To make bug injection uniform across assignments and logical expressions, Encarsia inserts intermediate wires between the logic cell ports and their input signals. Applying the same swap procedure to the assignments that drive these intermediate wires swaps the drivers of the cell ports, which simulates signal mix-ups in logical expressions.

2. Broken Conditionals: Broken conditionals are created by altering the logic controlling conditional execution. Encarsia first abstracts this logic into a table in which each row represents a conditional case, mapping specific input combinations to corresponding outcomes. This representation lets Encarsia handle all conditionals the same way. To inject bugs, Encarsia modifies the table by removing cases or corrupting input combinations, for example by introducing don’t-care values (X) that make a condition overly permissive. The altered conditionals are then reintegrated into the circuitry, mimicking real-world scenarios where designers overlook edge cases or formulate conditions incorrectly.
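Both transformations can be illustrated on a deliberately tiny model that stands in for RTLIL: assignments as a mapping from driven signal to driver, cells as a mapping from port to connected signal, and a conditional as an ordered table of input patterns (`0`, `1`, or `-` for don’t care) with outcomes. All names and data structures here are hypothetical; the real passes operate on Yosys’s RTLIL data structures instead.

```python
import random
from typing import Dict, List, Tuple

# ---- Signal mix-ups ---------------------------------------------------------

def fit(value: int, src_width: int, dst_width: int, signed: bool = False) -> int:
    """Resize a value like a Verilog assignment: drop excess MSBs, or zero-/sign-extend."""
    value &= (1 << src_width) - 1
    if dst_width <= src_width:
        return value & ((1 << dst_width) - 1)
    if signed and (value >> (src_width - 1)) & 1:
        # Replicate the sign bit into the new upper bits.
        value |= ((1 << dst_width) - 1) ^ ((1 << src_width) - 1)
    return value


def expose_cell_ports(cells: Dict[str, Dict[str, str]]):
    """Route every cell port through a fresh intermediate wire.

    Each port connection becomes an ordinary assignment (wire <- signal), so the same
    mix-up procedure used for assignments can also swap the inputs of logic cells.
    """
    rewired: Dict[str, Dict[str, str]] = {}
    assigns: Dict[str, str] = {}
    for cell, ports in cells.items():
        rewired[cell] = {}
        for port, signal in ports.items():
            wire = f"{cell}_{port}"  # hypothetical naming scheme
            rewired[cell][port] = wire
            assigns[wire] = signal
    return rewired, assigns


def inject_mixup(assigns: Dict[str, str], rng: random.Random):
    """Pick two assignments at random and swap their drivers (returns a mutated copy).

    If a swapped driver's width differs from its new target, `fit` shows how the
    value would be resized.
    """
    mutated = dict(assigns)
    a, b = rng.sample(sorted(mutated), 2)
    mutated[a], mutated[b] = mutated[b], mutated[a]
    return mutated, (a, b)

# ---- Broken conditionals ----------------------------------------------------

Table = List[Tuple[str, str]]  # (input pattern, outcome); the first matching row wins


def evaluate(table: Table, bits: str, default: str) -> str:
    """Return the outcome of the first row whose pattern matches the input bits."""
    for pattern, outcome in table:
        if all(p in ("-", b) for p, b in zip(pattern, bits)):
            return outcome
    return default


def drop_case(table: Table, rng: random.Random) -> Table:
    """Remove one case, mimicking an overlooked edge case."""
    i = rng.randrange(len(table))
    return table[:i] + table[i + 1:]


def relax_case(table: Table, rng: random.Random) -> Table:
    """Turn one cared-about bit of one row into a don't care (an overly permissive case)."""
    rows = [i for i, (pattern, _) in enumerate(table) if "0" in pattern or "1" in pattern]
    i = rng.choice(rows)
    pattern, outcome = table[i]
    k = rng.choice([j for j, c in enumerate(pattern) if c != "-"])
    return table[:i] + [(pattern[:k] + "-" + pattern[k + 1:], outcome)] + table[i + 1:]
```

For instance, relaxing the row `"10" -> grant` in the table `[("10", "grant"), ("11", "deny")]` to `"1-" -> grant` makes the input `11` hit the grant case before reaching the deny row, which is the kind of overly permissive condition described above.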

Fuzzer Evaluation

We evaluated three state-of-the-art CPU fuzzers: DifuzzRTL, ProcessorFuzz, and Cascade. Our results showed that DifuzzRTL and ProcessorFuzz each detected 41.67% of the injected bugs, while Cascade detected 40%. These detection rates indicate that Encarsia effectively injects hard-to-find bugs that can serve as a meaningful benchmark for evaluating CPU fuzzers. Furthermore, the results suggest that fuzzers are still far from exhaustive in their bug-finding capabilities, highlighting the need for further advancements in hardware fuzzing research.

The selection of these three fuzzers was deliberate. DifuzzRTL and ProcessorFuzz share the same program generation infrastructure, allowing us to isolate and evaluate the impact of their differing coverage metrics and bug detection granularity. In contrast, the black-box fuzzer Cascade employs a distinct program generation strategy, enabling a comparative analysis of how different input programs impact bug detection. By strategically comparing the performance of these fuzzers, we identified several key insights into the strengths and weaknesses of different fuzzing approaches:

1. Instruction-Granular Bug Detection: DifuzzRTL and ProcessorFuzz, which detect bugs at different granularities, found the same set of bugs, indicating that finer granularity does not translate into better bug detection.

2. Effectiveness of Coverage Metrics: DifuzzRTL and ProcessorFuzz each detected the same set of bugs with and without coverage feedback, suggesting that hardware-specific structural coverage metrics, often advertised as central by many fuzzers, do not improve bug detection capabilities.

3. Importance of Seed Programs: The choice of seed programs, and program generation in general, plays a crucial role in detecting bugs. Different seed programs led to the discovery of different bugs, including bugs that other mechanisms, such as coverage feedback, failed to find.

4. Speed of Bug Detection: Black-box fuzzing, as demonstrated by Cascade, was found to be significantly faster at detecting bugs compared to the other two fuzzers relying on structural coverage feedback. This suggests that the overhead of coverage feedback strategies slows down the bug discovery process, potentially leading to missed bugs in time-bounded fuzzing campaigns.

5. Incomplete ISA Testing: Fuzzers do not systematically test the full ISA, so certain bugs, particularly those related to specific instructions or ISA extensions, go undetected.

6. Filtering Mechanisms for Known Bugs: Existing mechanisms for filtering known bugs are too broad, leading to the exclusion of test inputs that could potentially uncover new bugs. More targeted filtering approaches are needed to avoid masking unknown bugs while still filtering out known issues.

7. Diverse Source Values: Values that, according to the ISA, come from the same source may in fact originate from different components within the CPU. Some fuzzers do not exercise all instructions that read such a value, implicitly assuming that they all obtain it from the same place, and therefore miss bugs.

8. Microarchitectural Corner Cases: Fuzzers fail to detect bugs arising from corner cases in the microarchitecture, such as a full buffer causing a value to be discarded, leading to incorrect results. A more systematic approach to covering all possible microarchitectural execution paths is needed to improve bug detection.

These insights highlight the need for more principled approaches in the development of hardware fuzzers. By addressing the identified challenges, future research can significantly improve the effectiveness of hardware fuzzing, leading to more robust and reliable CPU designs.

Paper and code:

Encarsia will be presented at USENIX Security ’25, and its source code is openly available here. We hope you find it useful for evaluating your shiny new hardware fuzzer!

Frequently Asked Questions

Only around 40% of the bugs were found by the fuzzers. Does this imply that fuzzers are ineffective?

No, this doesn’t imply that fuzzers are ineffective. Some of the issues we identified, such as untested ISA extensions, are relatively easy to fix and could lead to significant improvements in bug detection. For more complex challenges, the insights gained from Encarsia, along with our recommendations for future work, can guide the development of more robust fuzzing techniques in the long term.

I am skeptical that all bugs can be traced back to just two syntactic transformations. Is it possible to add more bug-injecting transformations into Encarsia?

All 177 bugs identified in our survey can, in fact, be traced back to just two core transformations: signal mix-ups and broken conditionals. These transformations were intentionally designed to generate a broad spectrum of bugs that supports a comprehensive fuzzer evaluation. Additional bug-injecting transformations would essentially be special cases of these two fundamental types, but they could still be valuable for targeting specific classes of bugs. Such transformations can be added by creating a new injection pass in Yosys and integrating it into Encarsia’s main script.

You argue that coverage does not significantly contribute to bug detection in fuzzing. How does this align with established research on software fuzzing, which often emphasizes the importance of coverage?

We are not suggesting that coverage cannot help fuzzers identify bugs in general. Rather, we argue that current structural coverage metrics are insufficient in this regard. While exploring the reasons behind this is beyond the scope of Encarsia, we hypothesize that the complex, interconnected, and parallel nature of modern hardware leads to increased coverage for nearly any input, reducing the metrics’ effectiveness in guiding bug discovery.

Can Encarsia be used to inject bugs into other CPUs or evaluate additional fuzzers?

Yes, Encarsia is designed to be extensible and user-friendly. For further information on how to extend Encarsia, please refer to the documentation.

How can I contribute to Encarsia?

Just open a pull request on GitHub and we will take a look.

Acknowledgements

This work was supported by the Swiss State Secretariat for Education, Research and Innovation under contract number MB22.00057 (ERC-StG PROMISE).